In college courses on fiction and literature, my teachers emphasized the difference between implicit and explicit meanings, and how important it was to understand that difference in order to derive the full meaning from what I was reading. In technical documentation about specifications and standards, the distinction is even more important. Mismatches between the engineering reader’s level of understanding and that of the expert writing the standard complicate the problem.

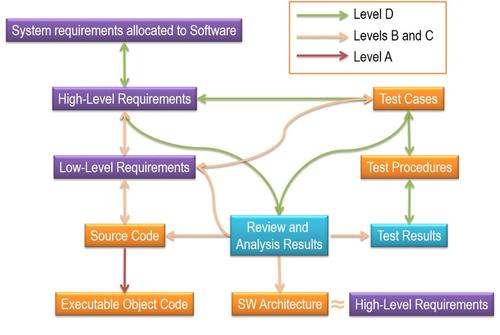

If the writer assumes too much about the reader’s level of expertise, he or she will fall into the habit of communicating in technical shorthand that the reader may not be able to decode. I have found that this is often the case in highly technical and nuanced areas, such as specifications for high-reliability and safety standards like DO-178. What was implicitly assumed in DO-178B has become explicit in DO-178C.

For all but the rarest of use cases, developers building software for traditional single-core processors under the older DO-178B found it safest and fastest to certify against what the standard explicitly required. In the transition to multicore designs, things got much more complicated, and that assumption began to break down. In such an environment the program has to be broken down into well-defined modular functional units with interfaces that are as unambiguous as possible. Implicit in this methodology is the need to test all of the software modules carefully to make sure they come together and interact properly.

One place where depending only on DO-178B’s explicit requirements has caused a lot of problems is in relation to control and data coupling, which are particularly problematic in multicore designs. Control coupling is where one software module sends data to another module in order to influence or direct its behavior. Data coupling is where one software module simply sends information to another one but does not place any requirement on the receiving module, nor expect some sort of return action.
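To make the two definitions above concrete, here is a minimal sketch. Python is used only for readability (avionics code is more typically written in C or Ada), and the function names and the altitude example are hypothetical, chosen purely for illustration.

```python
# Hypothetical modules, named only to illustrate the two kinds of coupling.

def format_altitude(altitude_ft: float, use_metric: bool) -> str:
    """Control coupling: the caller's flag directs which branch executes."""
    if use_metric:
        return f"{altitude_ft * 0.3048:.1f} m"
    return f"{altitude_ft:.1f} ft"


def log_altitude(altitude_ft: float, log: list) -> None:
    """Data coupling: the value is simply stored; it does not steer
    the receiver's behavior, and nothing is expected in return."""
    log.append(altitude_ft)
```

In the first function the `use_metric` argument changes what the receiving module does; in the second, the receiver's behavior is the same whatever value arrives.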

Many multicore developers started having problems getting their designs through the certification process. For example, they did not realize that they had to demonstrate that the software modules and their couplings interacted only in the ways specified in the original design, a requirement that was implicit rather than explicit in DO-178B. For the same reason, even though they followed the explicit rules in DO-178B, they were unable to demonstrate that unplanned, anomalous, or erroneous actions were not possible.
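The idea of showing that modules interact "only in the ways specified" can be sketched as a comparison between two sets: the couplings the design allows and the couplings actually observed during analysis or test. This is an illustrative sketch, not a procedure taken from the standard; the module names and the `(source, target, kind)` record format are assumptions.

```python
from typing import Set, Tuple

# Assumed record format: (source module, target module, "control" or "data").
Coupling = Tuple[str, str, str]


def unplanned_couplings(specified: Set[Coupling],
                        observed: Set[Coupling]) -> Set[Coupling]:
    """Couplings seen in the software that the design never specified."""
    return observed - specified


def unexercised_couplings(specified: Set[Coupling],
                          observed: Set[Coupling]) -> Set[Coupling]:
    """Couplings the design specifies that analysis or test never observed."""
    return specified - observed


# Hypothetical design and observation data.
DESIGN = {
    ("nav", "display", "data"),
    ("autopilot", "actuator", "control"),
}
OBSERVED = {
    ("nav", "display", "data"),
    ("nav", "actuator", "control"),  # not in the design
}
```

With this data, `unplanned_couplings(DESIGN, OBSERVED)` flags the `nav` to `actuator` link, while `unexercised_couplings(DESIGN, OBSERVED)` flags the `autopilot` to `actuator` link that was specified but never seen.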

That confusion has been cleared up in the newer DO-178C. Designed as the standard for software used in civil airborne systems, it now explicitly requires that control and data coupling assessments be performed on safety-critical software to ensure that design, integration, and test objectives are met.

This requires developers to carefully measure control and data coupling using a detailed combination of control and data flow analysis. This is a difficult enough process in single core designs, but borders on impossible in some mil-aero multicore designs and requires a new approach to doing that analysis.

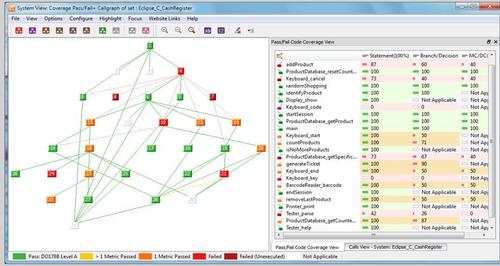

One of the best tools to do this tough job is the newest Version 9.5 of the LDRA Tool Suite with its new improved "Uniview." This is a sophisticated graphical tool for observing all of the software components and artifacts in a multicore design and providing requirements traceability relating to system interdependencies and behavior.

I am a sucker for graphical approaches to solving almost any problem. Even in high school, instead of using the traditional mathematical techniques for solving math and physics problems, I tried to find a way to come up with a graphical representation of the problem. I found that I not only could get the right answers faster, but I came away with a better understanding of the nature of the problem I was dealing with.

I find that is also true with LDRA's Version 9.5. The improved Uniview capabilities include not only traditional code coverage but also the tracking of data and control coupling. On the control coupling side, it allows a developer to perform flow analysis on both a program's calling hierarchy and its individual procedures and see the results instantly, showing which control functions are invoked by others and how.
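For readers who have not seen a calling-hierarchy analysis, the following is a toy sketch of the general idea using Python's standard `ast` module on Python source. It is not how the LDRA tools work internally, which the article does not describe; the `SAMPLE` program and the `call_graph` helper are hypothetical, and the sketch only handles direct calls to plainly named functions.

```python
import ast


def call_graph(source: str) -> dict:
    """Map each top-level function to the set of plain names it calls."""
    tree = ast.parse(source)
    graph = {}
    for node in tree.body:
        if not isinstance(node, ast.FunctionDef):
            continue
        calls = set()
        for sub in ast.walk(node):
            # Only direct calls such as f(x); method calls are ignored here.
            if isinstance(sub, ast.Call) and isinstance(sub.func, ast.Name):
                calls.add(sub.func.id)
        graph[node.name] = calls
    return graph


# Hypothetical program under analysis.
SAMPLE = """
def read_sensor():
    return 42

def filter_value(x):
    return x

def control_loop():
    v = read_sensor()
    return filter_value(v)
"""
```

Calling `call_graph(SAMPLE)` yields a dictionary in which `control_loop` maps to `read_sensor` and `filter_value`, which is the raw material a graphical tool would draw as a call hierarchy.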

On the data flow analysis side, I am impressed by the way it follows variables through the source code, performing checks at both the procedure level and the system level. A developer gets an ongoing report on any anomalous behavior, an important part of a data coupling assessment. The graphical framework makes it much easier to see data dependencies between modules, considerably speeding up the verification of all data and control coupling paths.
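Following a variable through the source can likewise be illustrated with a small sketch. Again this is a simplified, assumed approach built on Python's standard `ast` module, not a description of the LDRA implementation: it treats names assigned at module level as shared data and reports which functions read or write them. The `SHARED_SAMPLE` program and the `shared_data_access` helper are hypothetical.

```python
import ast


def shared_data_access(source: str) -> dict:
    """Report, per top-level function, which module-level names it reads or writes."""
    tree = ast.parse(source)
    # Names assigned at module level are treated as shared data.
    shared = {
        target.id
        for node in tree.body if isinstance(node, ast.Assign)
        for target in node.targets if isinstance(target, ast.Name)
    }
    report = {}
    for node in tree.body:
        if not isinstance(node, ast.FunctionDef):
            continue
        names = [n for n in ast.walk(node) if isinstance(n, ast.Name)]
        declared = set()
        for sub in ast.walk(node):
            if isinstance(sub, ast.Global):
                declared.update(sub.names)
        stored = {n.id for n in names if isinstance(n.ctx, ast.Store)}
        params = {a.arg for a in node.args.args}
        # Assigned names and parameters are local unless declared global.
        local = (stored | params) - declared
        reads, writes = set(), set()
        for n in names:
            if n.id in shared and n.id not in local:
                if isinstance(n.ctx, ast.Store):
                    writes.add(n.id)
                elif isinstance(n.ctx, ast.Load):
                    reads.add(n.id)
        report[node.name] = {"reads": reads, "writes": writes}
    return report


# Hypothetical program under analysis.
SHARED_SAMPLE = """
altitude = 0

def update(sample):
    global altitude
    altitude = sample

def display():
    return altitude

def shadow():
    altitude = 5
    return altitude
"""
```

Here `update` is reported as a writer of `altitude` and `display` as a reader, so the two are data-coupled through that variable, while `shadow` touches only a local of the same name and is not.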

This capability may be a big plus in complex and demanding heterogeneous multicore designs, beyond safety-critical ones such as those governed by DO-178. In such applications there are numerous cores, and their software module interactions are increasingly complex. Even in the current generation of mobile devices there is a mix of anywhere from five or six to a dozen processors of various types: general-purpose CPUs, graphics processing units, and digital signal processors. And the number and diversity are continuing to climb.

In such an environment, structured design using software modules with clear and unambiguous data and control coupling will be forced on developers. To get a design that simply works, let alone one that meets an imposed standard, developers will have to structure their software code into clearly defined functional modules that can operate as a cohesive system across all processors in the system. However, when you go modular, even in non-safety-critical designs it will be necessary to examine closely the way the software blocks come together and interact. And to do that effectively, a graphical approach such as the one incorporated into LDRA's tools may be the only way to go.
