My chum, Rich Quinnell, recently wrote an article titled "Embedded systems survey uncovers trends & concerns for engineers." In response, Bob Snyder emailed Rich some very interesting comments and questions.

Rich brought me into the conversation and -- with Bob's permission -- we decided I should post this blog presenting his comments and questions, and soliciting input from the community. So here is Bob's message:

Richard,

I really appreciate the hard work that the folks at UBM do to provide the embedded survey results.

It seems as though non-real-time, soft real-time, and hard real-time applications have very different requirements. I have been closely studying the survey results for many years and trying to understand (or imagine) how the responses to some of the questions might be correlated.

For example, it would be nice to know how the overall movement to 32-bit MCUs (most of which have cache) breaks down by application (e.g., non-real-time, soft real-time, and hard real-time).

Are popular 32-bit MCUs, such as ARM and MIPS, being widely adopted for hard real-time applications where worst-case execution time is at least as important as average-case execution time, and where jitter is often undesirable? If so, do people disable the cache in order to achieve these goals, or simply throw MIPS at the problem and rely upon statistical measures of WCET and jitter?
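
As a rough illustration of what "statistical measures of WCET and jitter" could look like in practice, the following Python sketch summarizes a set of measured execution times. The function name `summarize_timings` and the sample values are hypothetical, chosen only for illustration. Note that the largest observed time is only a lower bound on the true WCET, since a rarer and slower path may never have shown up during measurement.

```python
from statistics import mean


def summarize_timings(samples_us):
    """Summarize measured execution times, given in microseconds."""
    if not samples_us:
        raise ValueError("need at least one timing sample")
    fastest = min(samples_us)
    slowest = max(samples_us)
    return {
        "average_us": mean(samples_us),
        # Largest time actually seen; the true WCET may be higher.
        "observed_worst_us": slowest,
        # Peak-to-peak spread between the slowest and fastest runs.
        "jitter_us": slowest - fastest,
    }


# Hypothetical measurements of one task, e.g. captured with a hardware timer.
samples = [10.0, 10.5, 10.0, 14.0, 10.5]
stats = summarize_timings(samples)
print(stats)
```

In this made-up data set a single slow run (perhaps a cache miss) barely moves the average but sets both the observed worst case and the jitter, which is the gap between average-case and worst-case behavior that the question above is concerned with.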

Performance penalty of completely disabling the cache

Microchip's website explains how to completely disable the cache on a PIC32MZ (MIPS 14K core). The article says that doing this will reduce performance by a factor of ten: "You probably don't want to do this because of lower performance (~ 10x) and higher power consumption."

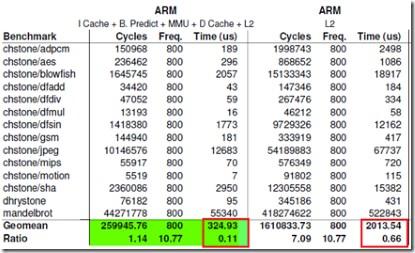

Somebody at the University of Toronto ran a large set of benchmarks comparing various configurations of a specific ARM processor. When they compared a fully-enabled cache configuration to an L2-only configuration, the L2-only setup was six times slower (shown in the red rectangles below). It seems reasonable to assume that if L2 had also been disabled, performance would have been even worse.

Based upon this data, it seems reasonable to conclude that when the cache is completely disabled on a 32-bit micro, the average performance is roughly ten times worse than with the cache fully enabled.

Why would anyone use a cache-based MCU in a hard real-time application?

The fastest PIC32 processor (the PIC32MZ) runs at 200 MHz. With the cache fully disabled, it would effectively be running at 20 MHz. The 16-bit dsPIC33E family runs at 70 MHz with no cache. Admittedly, the dsPIC will need to execute more instructions if the application requires arithmetic precision greater than 16 bits. But for hard real-time applications that can live with 16-bit precision, the dsPIC33E would seem to be the more appropriate choice.
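
The arithmetic behind that comparison can be sketched in a few lines of Python. This is a back-of-the-envelope model only: it assumes the roughly tenfold slowdown estimated above, treats clock rate as a stand-in for throughput, and ignores differences in instruction set and cycles per instruction. The function name `effective_clock_mhz` is hypothetical.

```python
def effective_clock_mhz(clock_mhz, slowdown_factor):
    """Clock rate scaled down by an assumed average slowdown factor."""
    return clock_mhz / slowdown_factor


# PIC32MZ at 200 MHz with the cache disabled, assuming the ~10x penalty.
pic32mz_uncached_mhz = effective_clock_mhz(200, 10)

# dsPIC33E at 70 MHz with no cache, so no penalty is applied.
dspic33e_mhz = 70

ratio = dspic33e_mhz / pic32mz_uncached_mhz
print(f"PIC32MZ without cache: about {pic32mz_uncached_mhz:.0f} MHz equivalent")
print(f"dsPIC33E advantage under this model: {ratio:.1f}x")
```

Under these assumptions the uncached PIC32MZ comes out at about 20 MHz equivalent, which puts the 70 MHz dsPIC33E ahead by a factor of 3.5 for work that fits in 16-bit arithmetic.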

I am having trouble understanding the rationale for using an ARM or PIC32 in a hard real-time application. These chips are designed with the goal of reducing average-case execution time at the expense of increased worst-case execution time and increased jitter. When the cache is disabled, they appear to have worse performance than devices that are designed without cache.

Atmel's 32-bit AVR UC3 family has neither an instruction cache nor a data cache, so this is not a 32-bit issue per se. But it seems that the majority of 32-bit MCUs do have cache and are targeted at soft real-time applications such as graphical user interfaces and communication interfaces (e.g. TCP/IP, USB) where WCET and jitter are not major concerns.

Breakdown by market segment

It seems to me that there will always be a large segment of the market (e.g., industrial control systems) where hard real-time requirements would militate against the use of a cache-based MCU.

It would be interesting to see the correlation between choice of processor (non-cached vs. cached, or 8/16 vs. 32 bits) and the application area (soft real-time vs. hard real-time, or GUI/communications vs. industrial control). I wonder if it would be possible to tease that out of the existing UBM survey data.

Looking at the 2014 results

With regard to the question "Which of the following capabilities are included in your current embedded project?", we see that over 60 percent of projects include real-time capability. The question does not attempt to distinguish between hard and soft real-time, and the 8/16/32-bit MCU question does not distinguish between cached and non-cached processors. Nevertheless, it might be interesting to see how the 8/16/32-bit responses correlate with the real-time, non-real-time, or signal-processing responses. I find it hard to believe that a large number of projects are using cached 32-bit processors for hard real-time applications.

It is interesting to note that every response for the capabilities question shows a falling percentage between 2010 and 2014. This suggests that other categories may be needed. I suppose it is possible that fewer projects required real-time capabilities in 2014, but it seems more likely that there was an increase in the number of projects that required other capabilities such as Display and Touch, which are not being captured by that question.

Thanks for considering my input, Bob Snyder.

Well, it's certainly true that non-real-time, soft real-time, and hard real-time applications have different requirements, but I'm not sure how best to articulate them. Do you have expertise in this area? Do you know the answers to any of Bob's questions? How about Bob's suggestions as to how we might consider refining our questions for the 2016 survey? Do you have any thoughts here? If so, please post them in the comments below.
