Mobile phone companies have been pushing smartwatches as a way to pump up a saturated and declining market, but there are good reasons to resist the marketing hype and not buy one. According to a report titled "Internet of Things Security Study: Smartwatches" just released by HP Fortify, as serious as security vulnerabilities have been on smartphones, they may be worse on smartwatches.

The Fortify team tested 10 Android- and Apple iOS-based devices and found that all contained significant vulnerabilities, including insufficient authentication, lack of encryption, and privacy concerns. The testing also covered the Android and iOS cloud and mobile application components. As a result of these findings, Jason Schmitt, general manager of HP Security Fortify, questions whether smartwatches are designed adequately to store and protect the sensitive data and tasks they are built to process.

Duh! This is not new information, especially for Android-based designs. Reports going back several years have revealed multiple security flaws and hacks on smartphones, especially Android-based ones. So how can we expect anything better from smartwatches?

But the results of the Fortify tests tell me that the situation is worse than I expected. The team found serious problems falling into five broad categories:

Insufficient user authentication/authorization

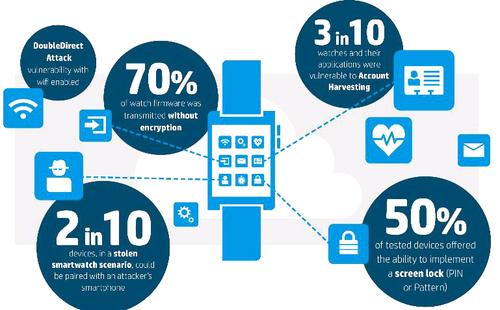

Every smartwatch tested was paired with a mobile interface that lacked two-factor authentication and the ability to lock out accounts after repeated failed password attempts. Thirty percent of the units tested were vulnerable to account harvesting, meaning an attacker could gain access to the device and its data through a combination of weak password policy, lack of account lockout, and user enumeration. As on smartphones, the smartwatches tested allowed users to upload their address book of names and phone numbers to a cloud server, which then returned (enumerated) the subset of the user's contacts who also use the service. Hackers can then use this enumeration list to derive useful information about a user's device, such as its operating system, and use it to mount system-specific attacks.
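
The report does not say how vendors should implement lockout or avoid user enumeration. The minimal Python sketch below, assuming a simple username/password back end (the `LoginService` class and the policy constants are hypothetical, not taken from the report), shows both ideas: lock an account after repeated failures, and give the caller the same result whether the username is unknown, the password is wrong, or the account is locked. Passwords are hashed with the standard library's `hashlib.pbkdf2_hmac`.

```python
import hashlib
import hmac
import os
import time
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical policy values, chosen for illustration only.
MAX_FAILURES = 5             # failed attempts allowed before the account locks
LOCKOUT_SECONDS = 15 * 60    # how long a locked account stays locked
PBKDF2_ITERATIONS = 100_000  # illustrative work factor


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a password hash with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, PBKDF2_ITERATIONS)


@dataclass
class Account:
    salt: bytes
    pw_hash: bytes
    failures: int = 0
    locked_until: float = 0.0


class LoginService:
    """Hypothetical login back end with lockout and uniform failure responses."""

    def __init__(self) -> None:
        self.accounts: Dict[str, Account] = {}
        # Used for unknown usernames so they cost the same hashing work as real ones.
        self._dummy_salt = os.urandom(16)
        self._dummy_hash = hash_password("placeholder", self._dummy_salt)

    def register(self, username: str, password: str) -> None:
        salt = os.urandom(16)
        self.accounts[username] = Account(salt, hash_password(password, salt))

    def login(self, username: str, password: str, now: Optional[float] = None) -> bool:
        """Return True on success. Every failure returns the same False, so the
        caller cannot tell whether an account exists or is locked."""
        now = time.time() if now is None else now
        acct = self.accounts.get(username)
        salt = acct.salt if acct else self._dummy_salt
        expected = acct.pw_hash if acct else self._dummy_hash
        # Always hash, even for unknown or locked accounts, to keep timing similar.
        candidate = hash_password(password, salt)
        if acct is None or now < acct.locked_until:
            return False
        if hmac.compare_digest(candidate, expected):
            acct.failures = 0
            return True
        acct.failures += 1
        if acct.failures >= MAX_FAILURES:
            # Lock the account and start counting afresh once the lock expires.
            acct.locked_until = now + LOCKOUT_SECONDS
            acct.failures = 0
        return False
```

Returning a bare `False` for every failure is a deliberate trade-off: the user sees one generic "invalid username or password" message, which is less helpful but gives an attacker nothing to enumerate.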

Lack of transport encryption

While 100 percent of the test products implemented transport encryption using SSL/TLS, 40 percent of the cloud connections used weak security ciphers and outdated SSL versions, including SSL v2, leaving them exposed to protocol attacks such as POODLE, which targets SSL 3.0.
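
Fixing this is largely a matter of configuration. The sketch below uses Python's standard `ssl` module to build a client context that refuses SSL v2/v3 and TLS versions below 1.2 and limits TLS 1.2 to modern cipher suites. The function name `make_client_context` is hypothetical, and the cipher string is one reasonable choice, not a recommendation from the report.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client TLS context that refuses SSL v2/v3 and early TLS."""
    # create_default_context() turns on certificate verification and hostname checking.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse anything older than TLS 1.2, which rules out SSL 3.0 and therefore POODLE.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # Restrict TLS 1.2 suites to forward-secret AEAD ciphers (OpenSSL cipher-string syntax).
    # This setting does not affect TLS 1.3 suites, which are already modern.
    ctx.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20")
    return ctx
```

A client would pass this context to `ctx.wrap_socket(sock, server_hostname=host)`, so the server's certificate and hostname are checked on every connection, which also addresses the man-in-the-middle concern.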

Insecure interfaces

Thirty percent of the tested smartwatches used cloud-based web interfaces that had security problems, the most serious of which involved account enumeration.

Insecure software/firmware

Seventy percent of the smartwatches had problems protecting firmware updates, including transmitting them without encryption. While many updates were ‘signed’ to help prevent the installation of contaminated firmware, the lack of encryption meant the files could still be downloaded and analyzed.
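
The difference between signing and encrypting can be shown in a short sketch. Real vendors typically use public-key signatures. This Python example substitutes an HMAC from the standard library with a hypothetical shared key, purely to illustrate the idea: the device rejects a modified image, but the image itself still travels as readable bytes, so anyone who intercepts it can analyze it.

```python
import hashlib
import hmac


def sign_firmware(image: bytes, key: bytes) -> bytes:
    """Stand-in for a vendor signature: HMAC-SHA256 over the image bytes.
    (A real update system would use a public-key signature instead.)"""
    return hmac.new(key, image, hashlib.sha256).digest()


def verify_firmware(image: bytes, tag: bytes, key: bytes) -> bool:
    """Accept the image only if its tag matches (constant-time comparison).
    This checks integrity only; the image bytes themselves are not hidden."""
    return hmac.compare_digest(sign_firmware(image, key), tag)
```

Protecting the contents as well would require encrypting the image or delivering it over a properly configured TLS channel, in addition to the integrity check.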

Privacy concerns

All of the smartwatches tested, like their smartphone big brothers, collected some form of personal information, such as name, address, date of birth, weight, gender, heart rate, and other health information, yet little thought was given to account enumeration issues, and some products continued to allow weak passwords.

The Fortify report offers some suggestions for correcting these problems. For vendors, it recommends the following:

- Ensure that transport layer security (TLS) implementations are configured and implemented properly

- Protect user accounts and sensitive data by requiring strong passwords

- Implement controls to prevent man-in-the-middle attacks

- Avoid apps that depend on the cloud to operate; instead, run as many functions as possible on the smartwatch itself
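
As one way to act on the password recommendation, a service can reject weak passwords at registration. In the Python sketch below, the minimum length, the distinct-character rule, and the tiny common-password list are hypothetical placeholders, not Fortify's criteria; a production check would use a much larger list of known-compromised passwords.

```python
from typing import List

MIN_LENGTH = 12  # hypothetical minimum length
# Tiny illustrative sample; a real service would check a large breached-password list.
COMMON_PASSWORDS = {"123456", "password", "qwerty", "111111", "letmein"}


def password_problems(password: str, username: str = "") -> List[str]:
    """Return the reasons a password fails the policy (an empty list means it passes)."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append(f"shorter than {MIN_LENGTH} characters")
    if password.lower() in COMMON_PASSWORDS:
        problems.append("appears in a list of common passwords")
    if username and username.lower() in password.lower():
        problems.append("contains the username")
    if len(set(password)) < 4:
        problems.append("uses too few distinct characters")
    return problems
```

Returning a list of reasons rather than a single boolean lets the companion app tell the user exactly what to change.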

But much of the burden still falls on the end user. Fortify’s report suggests that anyone using a smartwatch protect themselves by doing the following:

- Don't enable sensitive access control functions (e.g., car or home access) unless strong authentication is offered

- Enable security features (e.g., passcodes, screen locks, and encryption)

- For any interface associated with your watch, such as its mobile or cloud applications, make sure strong passwords are used

- Do not approve any unrecognized pairing requests

The Fortify report does not identify which devices failed or which OS each one ran. But based on Android’s abysmal security record on smartphones, I’m pretty certain that most of the smartwatches that failed were based on the Android platform. I would like to think that the Android-based smartwatches were running Version 5.1.1 (Lollipop) or later, with its added security and permissions capabilities. But given the poor performance of most of the smartwatches in these tests, that is a risky assumption to make.

I also suspect that there were few failures on Apple iOS-based smartwatches. This speculation is based on three factors that I think affect security. The first is the difference between proprietary and open source operating systems and the degree of control a company can impose on those who build apps for its OS. The second is the degree to which platform providers have incorporated security features into their OS, and the third is the degree to which they can force developers to adhere to the rules they have set down.

Apple's iOS is proprietary and all apps are written to an internal set of application programming interfaces. To qualify apps to run in the iOS environment, developers usually go through a rigorous set of procedures. By contract, adherence to Apple’s requirements is enforced by the threat of lawsuits and fines.

Google's Android, however, is an odd mix of open source and proprietary components. Android is an open source distribution of the Linux OS with a Java-based API wrapper, neither of which was originally designed with security in mind. While Linux can be made secure, it’s an expensive process, so it is only done where the application environment requires it.

For any open source platform, hardware vendors download a distribution to which they can add features, or from which they can remove features outside the basic core elements. Because of Android's mixed open source/proprietary nature, Google does not seem to have the same kind of legal or institutional muscle that Apple can exert. As far as I can see, any security enhancements that Google and the associated developer community have come up with are only recommendations, and not easily enforceable.

So if you are going to buy a smartwatch, I suggest using only those features that do not involve connecting with the outside world, such as telling the time. Unfortunately, beyond that, it’s getting harder to tell whether and when a feature connects. Because of limited power, memory, and compute resources, more and more apps you download to your Android-based smartwatch are not really installed on the watch but reside somewhere in the cloud, even some you would assume involve no connectivity at all.
