My first job fresh from college was testing and debugging control loading systems on flight simulators for the 747 and the "new" 767 airliners. In the late 1970s, the control loop that simulated the feel of the primary flight surfaces, and had to respond instantaneously to pilot inputs, was purely analogue. The digital portion consisted of a 32-bit Gould SEL "super minicomputer" with Schottky TTL and a screamingly fast 6.67 MHz system clock; it merely provided voltage inputs, via DACs, into an op amp summing junction. Those inputs represented slowly changing parameters such as airspeed and pitch angle.
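For readers who have never met a summing junction, here is a minimal sketch of the idea: an ideal DAC turns a digital code into a voltage, and an ideal inverting summing amplifier adds several such voltages, each weighted by its input resistor. The function names, the 12-bit resolution, the 10 V reference and the resistor values are all assumptions chosen for illustration, not details of the simulator described above, and a real op amp adds offset, bandwidth and saturation limits that are ignored here.

```python
def dac_output(code, bits=12, v_ref=10.0):
    """Ideal unipolar DAC (hypothetical): map an integer code to 0..v_ref volts."""
    if not 0 <= code < 2 ** bits:
        raise ValueError("code out of range for the given resolution")
    return v_ref * code / (2 ** bits)


def summing_amplifier(inputs, r_feedback):
    """Ideal inverting summing amplifier.

    inputs     -- iterable of (voltage, input_resistance) pairs
    r_feedback -- feedback resistance in ohms

    Returns Vout = -Rf * sum(Vi / Ri).
    """
    return -r_feedback * sum(v / r for v, r in inputs)


if __name__ == "__main__":
    # Two slowly changing parameters arrive as DAC codes (assumed values).
    airspeed_v = dac_output(2048)   # half scale
    pitch_v = dac_output(1024)      # quarter scale
    v_out = summing_amplifier(
        [(airspeed_v, 10e3), (pitch_v, 10e3)],  # equal 10 kOhm input resistors
        r_feedback=10e3,
    )
    print(f"airspeed = {airspeed_v} V, pitch = {pitch_v} V, sum = {v_out:.3f} V")
```

Changing an input resistor changes the weight of that term, which is how such a loop could scale each parameter without touching the digital side.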

"Op Amp", of course, is short for "Operational Amplifier", first developed to perform mathematical operations in analogue computing. Forget about FFTs, DSPs, and all that nonsense: back in the mid-70s (when analogue giants walked the earth and the Intel 4004 was barely out of diapers) there was an analogue IC for just about everything – multiplication and division, log and antilog operations, RMS-DC conversion, you name it.

With the later rise of the Dark Side, of course, many of those old analogue components, as well as the companies that gave them life, have breathed their last.

At least some of those functions survive, either as standalone parts or (sigh) as microcontroller functional blocks. And the real world, thankfully, remains stubbornly analogue, which means that most of the truly interesting "digital" problems are really analogue problems – grounding, crosstalk, race conditions, noise, EMC, etc.

We humans are products of the real world, too. Are we analogue or digital?

The information that makes up a unique human being is mostly to be found in two places: our genes and our brains. The information in genes can be considered digital, coded in the four-level alphabet of DNA. Although the human brain is often referred to as an analogue computer, and is often modelled by analogue integrated circuits, the reality is more nuanced.

In a fascinating discussion on this subject, computational neuroscientist Paul King states that information in the brain is represented in terms of statistical approximations and estimations rather than exact values. The brain is also non-deterministic and cannot replay instruction sequences with error-free precision; those are analogue features.

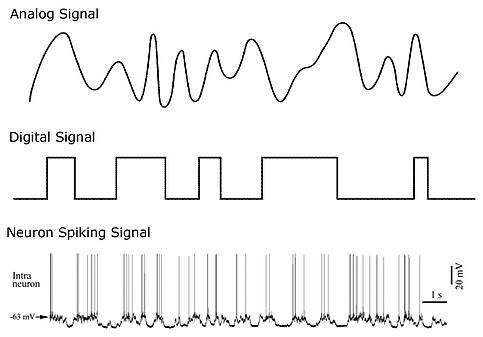

Figure 1: Analogue, digital, and neuron spiking signals (source: Quora)

On the other hand, the signals sent around the brain are "either-or" states that are similar to binary. A neuron fires or it does not, so in that sense, the brain is computing using binary signals.
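That mix of analogue and "either-or" behaviour can be sketched with a leaky integrate-and-fire neuron, a standard textbook simplification. It is not the model from the discussion cited above, and the function name, time constant and threshold below are assumed values for illustration only. The membrane voltage varies continuously, but the output at each time step is just 0 or 1.

```python
def lif_spikes(input_current, dt=1e-3, tau=20e-3, r_m=1.0,
               v_thresh=1.0, v_reset=0.0):
    """Leaky integrate-and-fire neuron, forward-Euler integration.

    input_current -- sequence of input values, one per time step
    dt, tau       -- time step and membrane time constant in seconds
    r_m           -- membrane resistance (arbitrary units)

    Returns a list of 0/1 values: 1 where the neuron fired.
    """
    v = v_reset
    spikes = []
    for i in input_current:
        # Analogue part: the voltage leaks toward r_m * i.
        v += dt / tau * (-v + r_m * i)
        if v >= v_thresh:
            # Digital part: the neuron fires, then resets.
            spikes.append(1)
            v = v_reset
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    weak = lif_spikes([0.5] * 200)    # never reaches threshold
    strong = lif_spikes([2.0] * 200)  # fires repeatedly
    print("weak input spikes:  ", sum(weak))
    print("strong input spikes:", sum(strong))
```

In this sketch a stronger input produces more spikes per unit time, so a continuous quantity ends up carried by the rate of all-or-nothing events.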

The precise mechanism of memory formation and retention, though, remains a mystery and may also have both analogue and digital components.

Is life itself analogue or digital? Freeman Dyson, the world-renowned mathematical physicist who helped found quantum electrodynamics, writes about a long-running discussion with two colleagues as to whether life could survive forever in a cold expanding universe. Their consensus is that life cannot survive forever if it is digital, but may survive forever if it is analogue.

What of my original topic – analogue vs digital computation? In a book published in 1989, Marian Pour-El and Ian Richards, two mathematicians at the University of Minnesota, proved in a mathematically precise way that analogue computers are more powerful than digital computers. They give examples of numbers that are provably non-computable with digital computers but are computable with a simple kind of analogue computer.

Consider a classical electromagnetic field obeying Maxwell's equations: Pour-El and Richards show that the field can be focused on a point in such a way that the strength of the field at that point is not computable by any digital computer, but it can be measured by a simple analogue device.

The book is available for free download. Digital engineers, knock yourselves out.

Meanwhile, analogue reigns supreme. Which, of course, we analogue engineers knew all along.

Now, about that raise...
