Application scenario

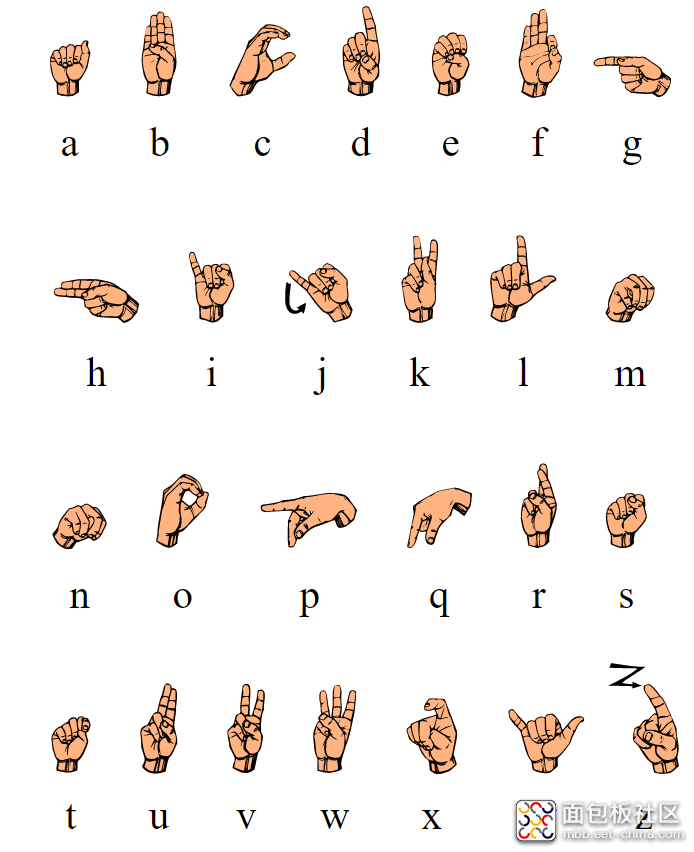

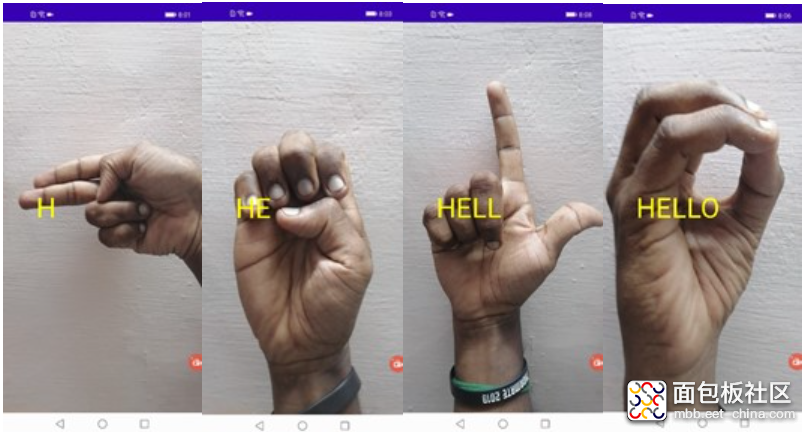

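Sign language is commonly used by people with hearing or speech impairments. It is a collection of hand gestures, including the motions and gestures used in everyday interaction. With ML Kit you can build an intelligent sign language alphabet recognizer that works like an aid: it can translate gestures into words or sentences, and words or sentences into gestures. What is attempted here is the American Sign Language (ASL) alphabet, classified by the positions of the joints, fingers, and wrist. Next, we will try to collect the word "HELLO" from hand gestures.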

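Development steps

1. Preparation

For the detailed preparation steps, refer to the HUAWEI Developers documentation; only the key steps are listed here. (https://developer.huawei.com/consumer/cn/doc/development/hiai-Guides/config-agc-0000001050990353?ha_source=hms1)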

1.1 Enable ML Kit

In HUAWEI AppGallery Connect, choose Develop > Manage APIs and make sure that ML Kit is enabled.

1.2 Configure the Maven repository address in the project-level build.gradle file

    buildscript {
        repositories {
            ...
            maven { url 'https://developer.huawei.com/repo/' }
        }
        dependencies {
            ...
            classpath 'com.huawei.agconnect:agcp:1.3.1.301'
        }
    }

    allprojects {
        repositories {
            ...
            maven { url 'https://developer.huawei.com/repo/' }
        }
    }

In the app-level build.gradle file, apply the AppGallery Connect plugin and add the hand keypoint detection dependencies:

    apply plugin: 'com.android.application'
    apply plugin: 'com.huawei.agconnect'

    dependencies {
        // Import the base SDK.
        implementation 'com.huawei.hms:ml-computer-vision-handkeypoint:2.0.2.300'
        // Import the hand keypoint detection model package.
        implementation 'com.huawei.hms:ml-computer-vision-handkeypoint-model:2.0.2.300'
    }

Declare the model dependency in the AndroidManifest.xml file:

    <meta-data
        android:name="com.huawei.hms.ml.DEPENDENCY"
        android:value="handkeypoint" />

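Declare the camera permission and the permission to read external storage in the AndroidManifest.xml file:

    <!--Camera permission-->
    <uses-permission android:name="android.permission.CAMERA" />
    <!--Read permission-->
    <uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />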

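2.1 Create a SurfaceView for the camera preview and a SurfaceView for the results

For now the result is only displayed in the UI; you could also extend this with TTS to read the result aloud.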

    mSurfaceHolderCamera.addCallback(surfaceHolderCallback)

    private val surfaceHolderCallback = object : SurfaceHolder.Callback {
        override fun surfaceCreated(holder: SurfaceHolder) {
            createAnalyzer()
        }

        override fun surfaceChanged(holder: SurfaceHolder, format: Int, width: Int, height: Int) {
            prepareLensEngine(width, height)
            mLensEngine.run(holder)
        }

        override fun surfaceDestroyed(holder: SurfaceHolder) {
            mLensEngine.release()
        }
    }

Create the hand keypoint analyzer:

    // Create the analyzer with MLHandKeypointAnalyzerSetting.
    val settings = MLHandKeypointAnalyzerSetting.Factory()
        .setSceneType(MLHandKeypointAnalyzerSetting.TYPE_ALL)
        // Set the maximum number of hand regions that can be detected within an image.
        // A maximum of 10 hand regions can be detected by default.
        .setMaxHandResults(2)
        .create()
    mAnalyzer = MLHandKeypointAnalyzerFactory.getInstance().getHandKeypointAnalyzer(settings)
    mAnalyzer.setTransactor(mHandKeyPointTransactor)

This class implements the MLAnalyzer.MLTransactor<T> interface. Use its transactResult method to obtain the detection results and implement your own business logic.

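    class HandKeyPointTransactor(surfaceHolder: SurfaceHolder? = null) :
        MLAnalyzer.MLTransactor<MLHandKeypoints> {

        // lastCharacter, displayText, canvas, paddingleft and paddingRight are
        // defined elsewhere; their declarations are omitted in this excerpt.

        override fun transactResult(result: MLAnalyzer.Result<MLHandKeypoints>?) {
            val foundCharacter = findTheCharacterResult(result)
            // Append a character only when it differs from the previous one.
            if (foundCharacter.isNotEmpty() && foundCharacter != lastCharacter) {
                lastCharacter = foundCharacter
                displayText.append(lastCharacter)
            }
            canvas.drawText(displayText.toString(), paddingleft, paddingRight, Paint().also {
                it.style = Paint.Style.FILL
                it.color = Color.YELLOW
            })
        }

        // Required by MLAnalyzer.MLTransactor; nothing to release in this excerpt.
        override fun destroy() {}
    }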

Create a LensEngine:

    LensEngine lensEngine = new LensEngine.Creator(getApplicationContext(), analyzer)
            .setLensType(LensEngine.BACK_LENS)
            // Adjust width and height depending on the orientation.
            .applyDisplayDimension(width, height)
            .applyFps(5f)
            .enableAutomaticFocus(true)
            .create();

Run the LensEngine:

    private val surfaceHolderCallback = object : SurfaceHolder.Callback {
        // Run the LensEngine in surfaceChanged().
        override fun surfaceChanged(holder: SurfaceHolder, format: Int, width: Int, height: Int) {
            createLensEngine(width, height)
            mLensEngine.run(holder)
        }
        // surfaceCreated() and surfaceDestroyed() are omitted in this excerpt.
    }

Stop the analyzer when it is no longer needed:

    fun stopAnalyzer() {
        mAnalyzer.stop()
    }

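You can use the transactResult method of the HandKeypointTransactor class to obtain the detection results and implement a specific service. Besides the coordinates of each hand keypoint, the detection result also includes confidence values for the palm and for each keypoint. Palms and keypoints that were recognized incorrectly can be filtered out based on these confidence values. In a real application, the threshold can be set flexibly according to how much misrecognition you can tolerate.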
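The confidence filtering described above can be sketched in plain Java. This is only a minimal sketch under stated assumptions: `Keypoint`, `ConfidenceFilter`, and the threshold passed in are hypothetical stand-ins rather than ML Kit types, and in a real app the coordinates and confidence values would come from the ML Kit detection result.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for one detected hand keypoint (not an ML Kit class).
final class Keypoint {
    final float x;
    final float y;
    final float score; // confidence value, assumed to lie in [0, 1]

    Keypoint(float x, float y, float score) {
        this.x = x;
        this.y = y;
        this.score = score;
    }
}

final class ConfidenceFilter {
    private ConfidenceFilter() {}

    // Keeps only the keypoints whose confidence is at least minScore,
    // preserving their original order.
    static List<Keypoint> filter(List<Keypoint> keypoints, float minScore) {
        List<Keypoint> kept = new ArrayList<>();
        for (Keypoint k : keypoints) {
            if (k.score >= minScore) {
                kept.add(k);
            }
        }
        return kept;
    }
}
```

A stricter threshold drops more doubtful keypoints at the cost of sometimes leaving a finger with too few points to classify, so the value is best tuned against real camera frames.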

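2.7.1 Find the direction of the fingers

Let us first assume that the possible vector slopes of a finger lie along the X axis and the Y axis.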

    private const val X_COORDINATE = 0
    private const val Y_COORDINATE = 1

    enum class FingerDirection {
        VECTOR_UP, VECTOR_DOWN, VECTOR_UP_DOWN, VECTOR_DOWN_UP, VECTOR_UNDEFINED
    }

    enum class Finger {
        THUMB, FIRST_FINGER, MIDDLE_FINGER, RING_FINGER, LITTLE_FINGER
    }

    var firstFinger = arrayListOf<MLHandKeypoint>()
    var middleFinger = arrayListOf<MLHandKeypoint>()
    var ringFinger = arrayListOf<MLHandKeypoint>()
    var littleFinger = arrayListOf<MLHandKeypoint>()
    var thumb = arrayListOf<MLHandKeypoint>()

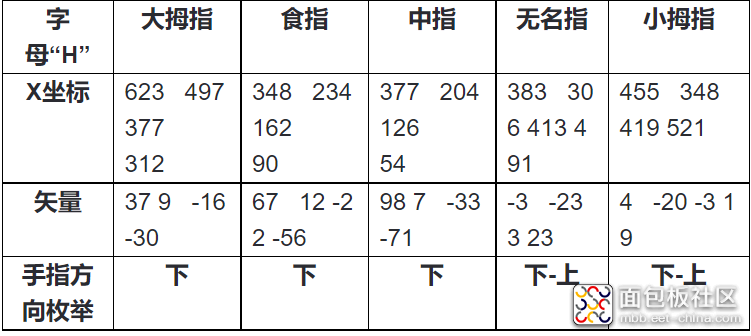

Take two simple keypoint samples for the letter H as an example:

    int[] datapointSampleH1 = {623, 497, 377, 312, 348, 234, 162, 90, 377, 204, 126, 54, 383, 306, 413, 491, 455, 348, 419, 521};
    int[] datapointSampleH2 = {595, 463, 374, 343, 368, 223, 147, 78, 381, 217, 110, 40, 412, 311, 444, 526, 450, 406, 488, 532};

A vector can be computed from the average of a finger's coordinates:

    // Forefinger x-coordinates: 623, 497, 377, 312
    double avgFingerPosition = (datapointSampleH1[0] + datapointSampleH1[1]
            + datapointSampleH1[2] + datapointSampleH1[3]) / 4.0;
    // Subtract the average from the x value at the given position;
    // position is the index of the keypoint being evaluated (0..3).
    double diff = datapointSampleH1[position] - avgFingerPosition;
    // Signed percentage, positive or negative, representing the direction.
    int vector = (int) ((diff * 100) / avgFingerPosition);
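To make the calculation concrete, here is a small self-contained Java sketch that applies it to the forefinger x-coordinates of the first H sample (623, 497, 377, 312). The class and method names (`FingerVector`, `percentFromAverage`) are hypothetical and chosen only for this illustration.

```java
import java.util.Arrays;

final class FingerVector {
    private FingerVector() {}

    // For each coordinate, returns its signed percentage deviation from the
    // average of all coordinates, truncated toward zero.
    static int[] percentFromAverage(int[] coords) {
        double sum = 0;
        for (int c : coords) {
            sum += c;
        }
        double avg = sum / coords.length;
        int[] out = new int[coords.length];
        for (int i = 0; i < coords.length; i++) {
            out[i] = (int) (((coords[i] - avg) * 100) / avg);
        }
        return out;
    }

    public static void main(String[] args) {
        int[] foreFingerX = {623, 497, 377, 312};
        // Average is 452.25, so the result is [37, 9, -16, -31].
        System.out.println(Arrays.toString(percentFromAverage(foreFingerX)));
    }
}
```

The values fall steadily from positive to negative along the finger, which is the kind of pattern the direction classification relies on.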


Using the vector directions above, we can classify each vector as one of the FingerDirection enum values defined earlier:

    private fun getSlope(keyPoints: MutableList<MLHandKeypoint>, coordinate: Int): FingerDirection {
        when (coordinate) {
            X_COORDINATE -> {
                if (keyPoints[0].pointX > keyPoints[3].pointX && keyPoints[0].pointX > keyPoints[2].pointX)
                    return FingerDirection.VECTOR_DOWN
                if (keyPoints[0].pointX > keyPoints[1].pointX && keyPoints[3].pointX > keyPoints[2].pointX)
                    return FingerDirection.VECTOR_DOWN_UP
                if (keyPoints[0].pointX < keyPoints[1].pointX && keyPoints[3].pointX < keyPoints[2].pointX)
                    return FingerDirection.VECTOR_UP_DOWN
                if (keyPoints[0].pointX < keyPoints[3].pointX && keyPoints[0].pointX < keyPoints[2].pointX)
                    return FingerDirection.VECTOR_UP
            }
            Y_COORDINATE -> {
                if (keyPoints[0].pointY > keyPoints[1].pointY && keyPoints[2].pointY > keyPoints[1].pointY && keyPoints[3].pointY > keyPoints[2].pointY)
                    return FingerDirection.VECTOR_UP_DOWN
                if (keyPoints[0].pointY > keyPoints[3].pointY && keyPoints[0].pointY > keyPoints[2].pointY)
                    return FingerDirection.VECTOR_UP
                if (keyPoints[0].pointY < keyPoints[1].pointY && keyPoints[3].pointY < keyPoints[2].pointY)
                    return FingerDirection.VECTOR_DOWN_UP
                if (keyPoints[0].pointY < keyPoints[3].pointY && keyPoints[0].pointY < keyPoints[2].pointY)
                    return FingerDirection.VECTOR_DOWN
            }
        }
        // No rule matched.
        return FingerDirection.VECTOR_UNDEFINED
    }

Get the direction of each finger and store it per finger:

    xDirections[Finger.FIRST_FINGER] = getSlope(firstFinger, X_COORDINATE)
    yDirections[Finger.FIRST_FINGER] = getSlope(firstFinger, Y_COORDINATE)
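As a quick sanity check of the classification rules, the sketch below ports the X-axis branch of getSlope to plain Java and applies it to the forefinger x-coordinates of the H samples. The class name `SlopeCheck` and the use of a bare int array in place of MLHandKeypoint objects are assumptions made to keep the example self-contained.

```java
enum FingerDirection {
    VECTOR_UP, VECTOR_DOWN, VECTOR_UP_DOWN, VECTOR_DOWN_UP, VECTOR_UNDEFINED
}

final class SlopeCheck {
    private SlopeCheck() {}

    // Same comparisons, in the same order, as the X_COORDINATE branch of
    // getSlope; x holds the four x-coordinates of one finger.
    static FingerDirection slopeX(int[] x) {
        if (x[0] > x[3] && x[0] > x[2]) return FingerDirection.VECTOR_DOWN;
        if (x[0] > x[1] && x[3] > x[2]) return FingerDirection.VECTOR_DOWN_UP;
        if (x[0] < x[1] && x[3] < x[2]) return FingerDirection.VECTOR_UP_DOWN;
        if (x[0] < x[3] && x[0] < x[2]) return FingerDirection.VECTOR_UP;
        return FingerDirection.VECTOR_UNDEFINED;
    }

    public static void main(String[] args) {
        // Forefinger x-coordinates from datapointSampleH1 and datapointSampleH2.
        System.out.println(slopeX(new int[] {623, 497, 377, 312})); // VECTOR_DOWN
        System.out.println(slopeX(new int[] {595, 463, 374, 343})); // VECTOR_DOWN
    }
}
```

Both samples classify the first finger as VECTOR_DOWN on the X axis, which is the direction the rule for the letter H expects for that finger.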


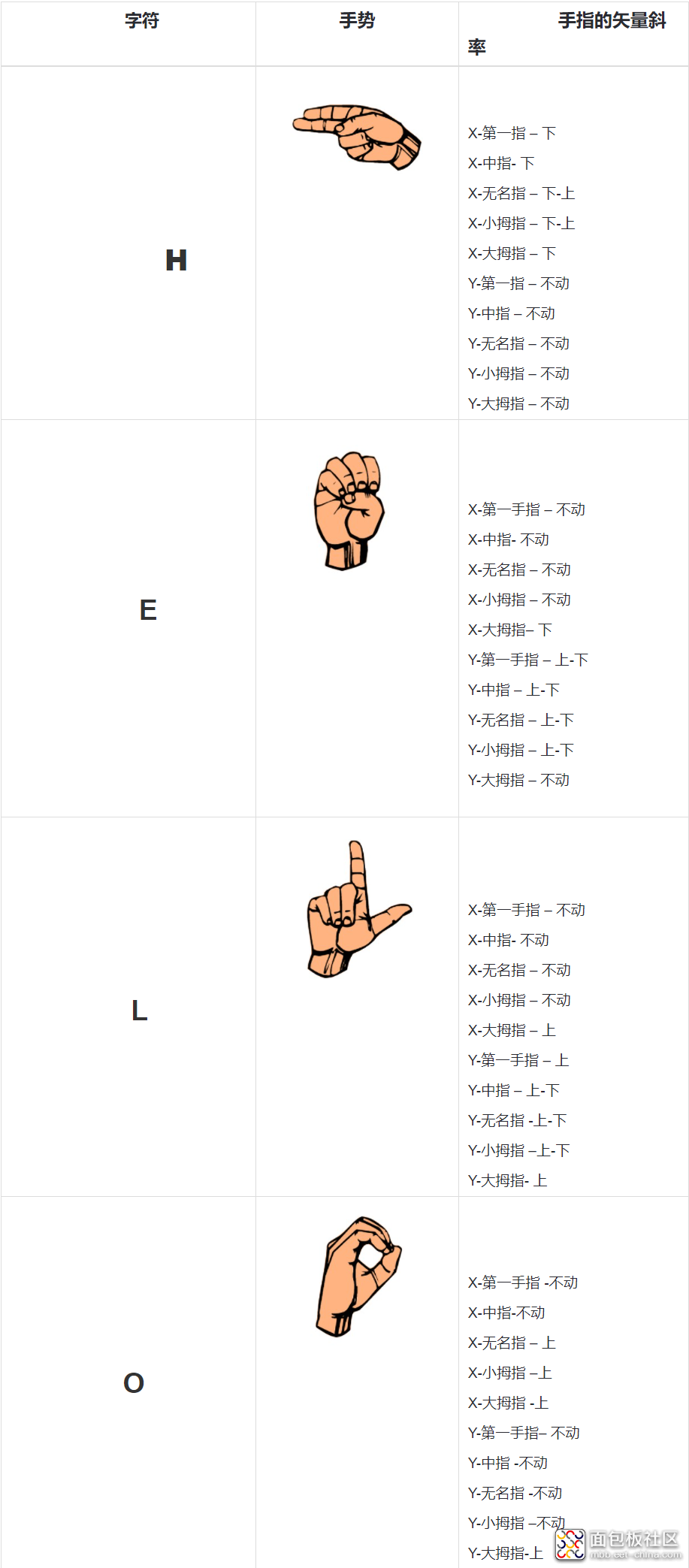

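2.7.2 Find the character from the finger directions

For now we treat "HELLO" as the only word. It needs the letters H, E, L, and O. Their corresponding X-axis and Y-axis vectors are shown in the figure.

Assumptions: the hand is always oriented vertically. Keep the palm and wrist parallel to the phone, that is, at 90 degrees to the X axis. Hold each pose for at least 3 seconds so that the character is recorded.

Start mapping the character vectors to find the string: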

    // Alphabet H
    if (xDirections[Finger.LITTLE_FINGER] == FingerDirection.VECTOR_DOWN_UP
        && xDirections[Finger.RING_FINGER] == FingerDirection.VECTOR_DOWN_UP
        && xDirections[Finger.MIDDLE_FINGER] == FingerDirection.VECTOR_DOWN
        && xDirections[Finger.FIRST_FINGER] == FingerDirection.VECTOR_DOWN
        && xDirections[Finger.THUMB] == FingerDirection.VECTOR_DOWN)
        return "H"

    // Alphabet E
    if (yDirections[Finger.LITTLE_FINGER] == FingerDirection.VECTOR_UP_DOWN
        && yDirections[Finger.RING_FINGER] == FingerDirection.VECTOR_UP_DOWN
        && yDirections[Finger.MIDDLE_FINGER] == FingerDirection.VECTOR_UP_DOWN
        && yDirections[Finger.FIRST_FINGER] == FingerDirection.VECTOR_UP_DOWN
        && xDirections[Finger.THUMB] == FingerDirection.VECTOR_DOWN)
        return "E"

    // Alphabet L
    if (yDirections[Finger.LITTLE_FINGER] == FingerDirection.VECTOR_UP_DOWN
        && yDirections[Finger.RING_FINGER] == FingerDirection.VECTOR_UP_DOWN
        && yDirections[Finger.MIDDLE_FINGER] == FingerDirection.VECTOR_UP_DOWN
        && yDirections[Finger.FIRST_FINGER] == FingerDirection.VECTOR_UP
        && yDirections[Finger.THUMB] == FingerDirection.VECTOR_UP)
        return "L"

    // Alphabet O
    if (xDirections[Finger.LITTLE_FINGER] == FingerDirection.VECTOR_UP
        && xDirections[Finger.RING_FINGER] == FingerDirection.VECTOR_UP
        && yDirections[Finger.THUMB] == FingerDirection.VECTOR_UP)
        return "O"
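The hand-written conditions above can also be expressed as data, which may be easier to maintain as more letters are added. The following Java sketch shows one possible shape for this, using the X-axis pattern of the letter H copied from the rule above. `LetterRules`, `patternH`, and `matches` are hypothetical names, and this is an illustration rather than the article's implementation.

```java
import java.util.EnumMap;
import java.util.Map;

enum FingerDirection {
    VECTOR_UP, VECTOR_DOWN, VECTOR_UP_DOWN, VECTOR_DOWN_UP, VECTOR_UNDEFINED
}

enum Finger {
    THUMB, FIRST_FINGER, MIDDLE_FINGER, RING_FINGER, LITTLE_FINGER
}

final class LetterRules {
    private LetterRules() {}

    // X-axis pattern for the letter H, copied from the hand-written rule.
    static Map<Finger, FingerDirection> patternH() {
        Map<Finger, FingerDirection> p = new EnumMap<>(Finger.class);
        p.put(Finger.LITTLE_FINGER, FingerDirection.VECTOR_DOWN_UP);
        p.put(Finger.RING_FINGER, FingerDirection.VECTOR_DOWN_UP);
        p.put(Finger.MIDDLE_FINGER, FingerDirection.VECTOR_DOWN);
        p.put(Finger.FIRST_FINGER, FingerDirection.VECTOR_DOWN);
        p.put(Finger.THUMB, FingerDirection.VECTOR_DOWN);
        return p;
    }

    // True when every finger named in the pattern has the expected
    // direction in the observed map; fingers absent from the pattern
    // are ignored.
    static boolean matches(Map<Finger, FingerDirection> pattern,
                           Map<Finger, FingerDirection> observed) {
        for (Map.Entry<Finger, FingerDirection> e : pattern.entrySet()) {
            if (observed.get(e.getKey()) != e.getValue()) {
                return false;
            }
        }
        return true;
    }
}
```

Letters whose rules mix X-axis and Y-axis directions, such as E, would need one pattern per axis; that extension is left out here to keep the sketch short.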


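4. More tips and tricks

1. When the approach is extended to all 26 letters, errors become more frequent. For more accurate scanning, observe each pose for 2-3 seconds and pick the most probable character computed over that window; this reduces misrecognized letters.

2. To support all orientations, add 8 or more directions on the X-Y axes. To do so, first work out the angle of each finger and the corresponding finger vector.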
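The first tip amounts to a majority vote over the characters recognized during the 2-3 second window. A minimal Java sketch of that idea follows; the class and method names are hypothetical, and how the per-frame results are collected into the window is left to the caller.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class MajorityVote {
    private MajorityVote() {}

    // Returns the most frequent non-empty string in the window, or "" when
    // the window holds no recognized character. On a tie, the candidate that
    // reaches the highest count first wins.
    static String mostFrequent(List<String> window) {
        Map<String, Integer> counts = new HashMap<>();
        String best = "";
        int bestCount = 0;
        for (String s : window) {
            if (s == null || s.isEmpty()) {
                continue; // frame with no recognized character
            }
            int c = counts.merge(s, 1, Integer::sum);
            if (c > bestCount) {
                bestCount = c;
                best = s;
            }
        }
        return best;
    }
}
```

With the LensEngine configured for 5 frames per second, a 3 second window holds roughly 15 per-frame results, so an occasional misread frame is outvoted by the correct ones.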

Summary

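This attempt is a brute-force coordinate technique. Once the vector mappings are generated, it can be extended to all 26 letters, and the directions can be extended to all 8 directions, which gives 26*8*5 fingers = 1040 vectors. To handle this better, we could use the first derivative of each finger in place of the vectors to simplify the computation.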
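One way to obtain 8 directions is to take the angle of the vector between two keypoints of a finger and quantize it into 45 degree sectors. The Java sketch below illustrates this; the class name, the sector numbering, and the choice of which two keypoints to pass in are assumptions made for the example.

```java
final class EightDirections {
    private EightDirections() {}

    // Quantizes the vector from (x0, y0) to (x1, y1) into one of 8 sectors,
    // numbered 0..7. Sector 0 is centered on the +X axis and the numbers
    // increase with the atan2 angle. In image coordinates the Y axis points
    // down, so increasing sectors run clockwise on screen. A zero-length
    // vector falls into sector 0.
    static int sector(double x0, double y0, double x1, double y1) {
        double angle = Math.toDegrees(Math.atan2(y1 - y0, x1 - x0));
        if (angle < 0) {
            angle += 360; // map (-180, 180] to [0, 360)
        }
        // Shift by half a sector so each sector is centered on its direction.
        return (int) Math.floor((angle + 22.5) / 45.0) % 8;
    }
}
```

For example, `sector(0, 0, 1, 0)` is 0, `sector(0, 0, 1, 1)` is 1, and `sector(0, 0, -1, 0)` is 4.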

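Instead of creating vectors by hand, another option is to use image classification: train a model and then use it as a custom model. This exercise was meant to check the feasibility of using the keypoint processing feature of Huawei ML Kit.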
